The U.S. Department of Energy (DOE) Office of Science, Office of High Energy Physics (HEP), has announced funding for research that aims to transform the capabilities and efficiency of scientific tools and instrumentation by integrating artificial intelligence (AI) and machine learning (ML) with the hardware used to operate and control these technologies. The funding supports the development of energy-efficient AI hardware, the creation of foundational models for scientific applications, and the use of AI and ML technologies in scientific research.

Two projects led by researchers at the DOE’s Lawrence Berkeley National Laboratory (Berkeley Lab) will receive funding through the Hardware-Aware Artificial Intelligence for High Energy Physics initiative. The first project aims to integrate AI into existing hardware to develop new control systems that enhance the capabilities of particle accelerators and colliders. The second seeks to improve the reliability and efficiency of particle detectors by creating intelligent sensor networks.

AI-integrated accelerator control systems promise the speed and precision needed to respond to complex, dynamic processes that occur on nanosecond-to-microsecond scales and span multi-mile distances in high-energy accelerators. Intelligent sensor networks, meanwhile, could provide adaptive, fault-tolerant detectors that improve data quality and flow, making experiments more effective.

These advancements promise to drive progress in accelerator physics, particle physics, and related fields.

“The integration of AI/ML into complex accelerator and detector systems is a new frontier where Berkeley Lab is particularly well positioned to make significant advances, and we greatly appreciate these enabling new awards from the DOE HEP office,” says Associate Laboratory Director for Physical Sciences at Berkeley Lab, Natalie Roe.

AI-enhanced control systems

High-energy particle accelerators and high-powered lasers are among the most complex and sophisticated instruments used in scientific research, advancing progress in high-energy physics (HEP) and many other fields. Controlling these systems, however, is a significant challenge due to stringent tolerance requirements, numerous adjustable parameters, and unpredictable environmental disruptions that can compromise their performance. New control tools could therefore open up opportunities by providing greater control over the precision and power of these systems, enabling the discovery of new physics and driving advances in scientific research.

Researchers from Berkeley Lab now aim to develop real-time, adaptive AI-driven control systems that provide the speed and precision needed to prevent instabilities, improving the performance and efficiency of future HEP colliders, increasing machine uptime, and laying the foundation for future AI-enhanced operations across the DOE Office of Science accelerator complex. The work will be led by Berkeley Lab’s Accelerator Technology & Applied Physics (ATAP) and Engineering Divisions, in collaboration with Fermi National Accelerator Laboratory (Fermilab) and SLAC National Accelerator Laboratory.

“Our goal is to develop scalable, AI-enhanced low-level stabilization systems for next-generation accelerators and high-power lasers,” explains Dan Wang, a research scientist in ATAP’s Berkeley Accelerator Controls & Instrumentation Program and co-principal investigator for the research. These technologies, says Wang, are susceptible to disturbances that can cause particle beam and laser instabilities, leading to wasted radio-frequency power, decreased beam quality, and reduced uptime.

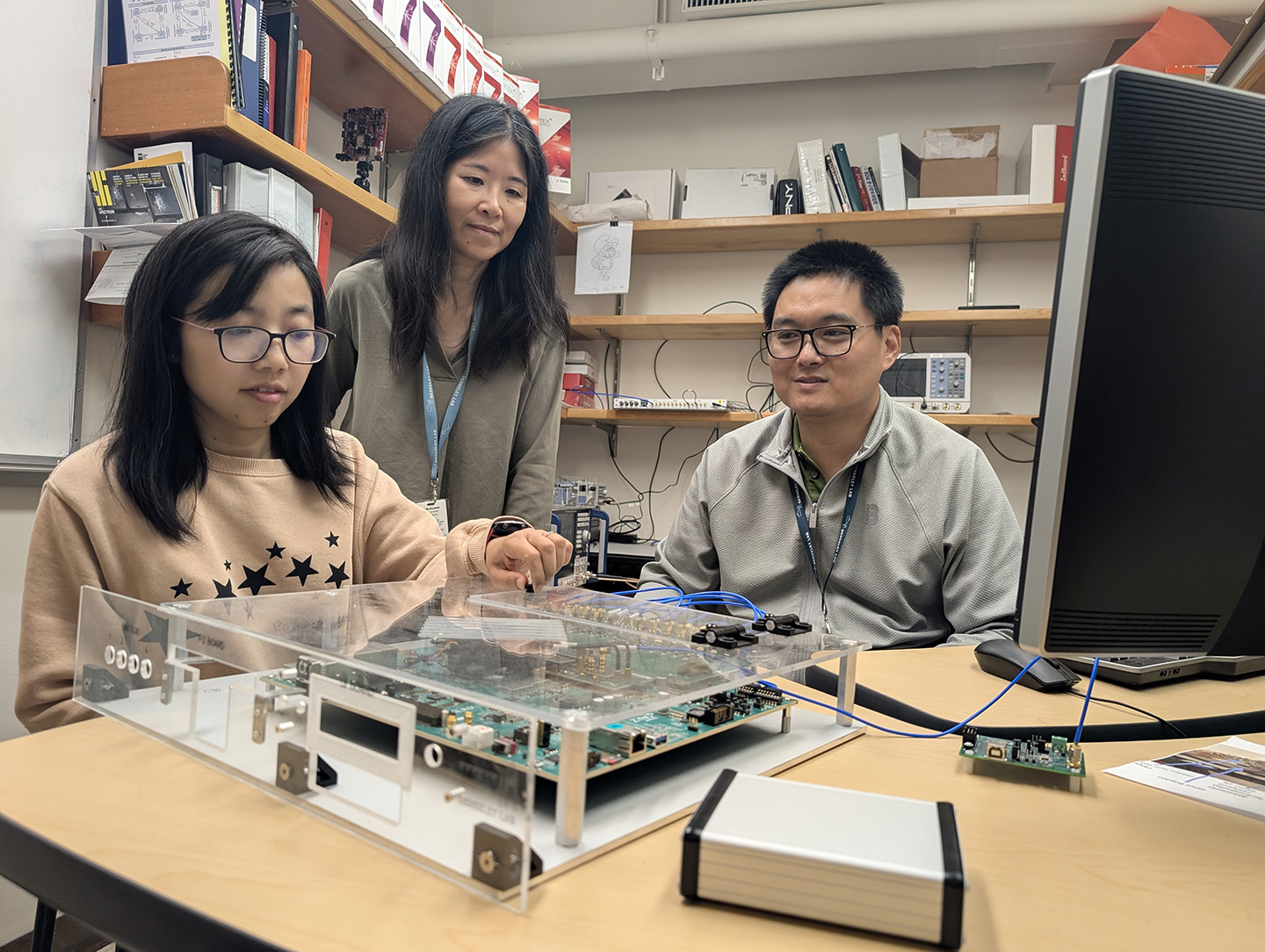

However, these systems exhibit nonlinear, time-varying dynamics that challenge traditional feedback methods. To address this, “we propose to integrate AI into embedded control systems to deliver fast, accurate, and real-time stabilization, especially for the accelerator’s superconducting radio-frequency (SRF) cavities,” says Qiang Du, a staff scientist in the Engineering Division and co-principal investigator. SRF cavities, which are essential components of accelerators, operate at cryogenic temperatures to accelerate particle beams efficiently with minimal energy loss.

Current AI models, however, struggle with noisy, unlabeled ML data and hardware limitations, impeding progress in high-performance control systems. To address these issues, the researchers plan to incorporate synchronized radio-frequency (RF) waveform data into the control loop and deploy ML models in distributed low-level RF architectures developed within Marble-Zest, an open-source control platform created at Berkeley Lab that offers a cost-effective and flexible solution for complex control tasks involving accelerator and laser systems. They will integrate and test this approach on the Linac Coherent Light Source (LCLS)-II at SLAC and the Proton Improvement Plan II, an upgrade to the Fermilab accelerator complex. Success there could lead to scalable deployment across other DOE facilities, including expanded collaboration with laser systems at the Berkeley Lab Laser Accelerator (BELLA) Center and the RF linac at LCLS.

The proposed research will address low-level RF (LLRF) controls, with a special focus on SRF cavities. While SRF cavities enable efficient acceleration, they are susceptible to disturbances that can cause instabilities, wasting power and degrading beam quality. By integrating machine learning into embedded LLRF systems, the project aims to achieve more precise and robust stabilization. The research will also seek to develop and demonstrate AI-enhanced controls for rapid stabilization of high-power lasers at BELLA.

According to Wang, the project will focus on integrating ML algorithms with electronic control circuits called field-programmable gate arrays (FPGAs). Widely used in accelerator facilities for electromagnetic field control, FPGAs offer the advanced timing and synchronization needed for the microsecond-scale, high-bandwidth feedback essential for precise, real-time beam and laser stability, which are crucial requirements for HEP research.
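The feedback idea described above can be illustrated with a toy software model. The sketch below is not the project's algorithm and does not run on an FPGA: it assumes a simple first-order plant, a hypothetical 5 kHz disturbance, and made-up gains (`kp`, `ki`, `tau`). It compares conventional proportional-integral (PI) feedback alone with the same loop plus a feed-forward correction. Here the feed-forward term is an idealized stand-in for a trained ML model that predicts the disturbance perfectly, which a real model would not.

```python
import math

def disturbance(t):
    """Hypothetical 5 kHz periodic disturbance acting on the toy plant."""
    return 0.2 * math.sin(2 * math.pi * 5e3 * t)

def rms_error(feedforward=None, steps=2000, dt=1e-6, tau=50e-6,
              setpoint=1.0, kp=5.0, ki=2e5):
    """Run PI feedback on a first-order plant; return RMS error over the second half."""
    x = 0.0          # plant output (e.g. a normalized field amplitude)
    integral = 0.0   # accumulated error for the integral term
    squared = []
    for k in range(steps):
        t = k * dt
        error = setpoint - x
        integral += error * dt
        u = kp * error + ki * integral       # conventional PI feedback
        if feedforward is not None:
            u -= feedforward(t)              # subtract the predicted disturbance
        # First-order plant update (explicit Euler step, assumed model)
        x += (dt / tau) * (-x + u + disturbance(t))
        if k >= steps // 2:                  # skip the start-up transient
            squared.append(error ** 2)
    return math.sqrt(sum(squared) / len(squared))

feedback_only = rms_error()
# Idealized predictor: the "model" is the true disturbance itself (assumption).
with_feedforward = rms_error(feedforward=disturbance)
```

In this toy setup, `with_feedforward` comes out far smaller than `feedback_only`, because the loop no longer has to wait for an error to appear before reacting. How closely a learned predictor could approach that ideal on real hardware is exactly what the research sets out to test.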

The team will also use digital twins and high-fidelity simulations to pre-train and validate ML models, allowing quick adaptation during deployment, lowering risk, and accelerating commissioning.

The work aims to demonstrate intelligent, real-time stabilization in accelerator LLRF control systems that are more resistant to environmental disturbances and changes in operational conditions. Furthermore, it could provide a model for AI-enhanced controls in accelerators and complex scientific instruments across DOE facilities, enabling the delivery of reliable and consistent ultrafast photon and particle beams for probing matter, novel light sources for medical diagnoses and treatments, and new applications in national security and industry.

“Creating the accelerators needed to unlock the next frontiers in particle physics requires unprecedented precision,” says ATAP Division Director Cameron Geddes. “This research is demonstrating methods of broad importance in using AI to drive physical systems—recognizing patterns and compensating for fluctuations to meet that need—for particle physics and society.”

Intelligent sensor networks

As the size and complexity of sensor arrays in particle physics experiments grow, the risk of single-point failures caused by manufacturing defects or extreme operating conditions—ranging from cryogenic temperatures to intense ionizing radiation—becomes a significant concern, especially when repairs or replacements are impractical or impossible. These failures hinder progress toward the next generation of detector technology, which is necessary for more advanced experiments to drive scientific discovery.

Drawing on expertise from a multidisciplinary team led by Berkeley Lab, new research aims to integrate ML-enabled algorithms directly into sensor hardware, creating a dynamic mesh communication system that can adapt to sensor failures in real time and yield highly fault-tolerant sensors. These intelligent networks could enhance the reliability and efficiency of sensor arrays used in particle detectors, such as those employed in the Deep Underground Neutrino Experiment (DUNE) and the ATLAS and CMS experiments at the Large Hadron Collider.

“I’m excited to see this close synergy between engineering and science teams to utilize AI and ML capabilities to advance accelerator controls and large sensor areas’ resilience and speed,” says Berkeley Lab Engineering Division Director and Chief Engineer, Daniela Leitner.

The project involves scientists and engineers from the Lab’s Physics, Engineering, and Applied Mathematics and Computational Research Divisions, in collaboration with colleagues from the University of Texas at Arlington. It will build upon the existing Hydra system, originally developed at Berkeley Lab for the DUNE Near Detector (ND), which has demonstrated fault-tolerant capabilities. Hydra was co-invented by Dan Dwyer, a senior scientist in the Physics Division, and Carl Grace, a staff scientist and head of the Electronics, Software, and Instrumentation Department in the Engineering Division. It won an R&D 100 Award in 2022; the awards recognize the year’s 100 most innovative technologies.

Sensor systems like Hydra, however, require external computing and programming for reconfiguration. According to Maurice Garcia-Sciveres, a senior scientist in the Lab’s Physics Division and the principal investigator for the project, this limits Hydra’s ability to respond to transient faults or optimize data movement in real time.

“Our approach involves using intelligent networks that respond dynamically and adaptively in real time, fully mitigating transient faults and improving data movement through the network, which reduces bandwidth requirements and power consumption.”

Building on the existing Hydra system, the researchers propose integrating on-chip intelligence, a significant upgrade over the current externally configured system. They plan to explore various chip designs that embed AI processing capabilities directly into the pixel sensor architecture by investigating both traditional digital logic and a biologically inspired in-pixel neural processing unit, which can efficiently perform ML tasks, enabling intelligent data processing at the source.

Central to the research will be the on-chip CMOS implementations of the ML algorithm, says Grace, who will focus on designing the intelligent Hydra system and managing chip testing. (CMOS technology, the dominant method for making integrated circuits, offers excellent scalability, enabling the integration of many components onto a single piece of silicon, which is essential for scaling up mesh architectures.)

According to Grace, the project will specifically investigate how to use AI “to identify network faults and inefficiencies and to optimize the sensor network topology in real time to mitigate component failures and improve data flow.”
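To make the idea of rerouting around failures concrete, here is a minimal sketch under stated assumptions. It is not the Hydra algorithm or the ML approach the team proposes: it uses an ordinary breadth-first search, run centrally, as a stand-in. The grid layout, the `build_routes` and `path_to_root` names, and the choice of `(0, 0)` as the readout node are all hypothetical, and the readout node itself is assumed to be working.

```python
from collections import deque

def build_routes(width, height, failed=frozenset(), root=(0, 0)):
    """Return {node: upstream_neighbor} for every working node reachable from root."""
    parent = {root: None}
    queue = deque([root])
    while queue:
        x, y = queue.popleft()
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            nx, ny = nxt
            inside = 0 <= nx < width and 0 <= ny < height
            if inside and nxt not in failed and nxt not in parent:
                parent[nxt] = (x, y)   # data from nxt flows upstream through (x, y)
                queue.append(nxt)
    return parent

def path_to_root(routes, node):
    """Follow upstream links from a node back to the readout node."""
    path = [node]
    while routes[path[-1]] is not None:
        path.append(routes[path[-1]])
    return path

# A 3x3 mesh whose center chip has failed: routes are rebuilt around it.
routes = build_routes(3, 3, failed={(1, 1)})
corner_path = path_to_root(routes, (2, 2))
```

After the center node fails, every remaining node still has a path to the readout node, and the path from the far corner runs along the edge of the grid instead of through the middle. The proposed research aims to make this kind of decision on the chips themselves, in real time, rather than in external software as in this sketch.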

The researchers plan to prototype two main network scales: a multi-chip network suitable for large-scale detectors, such as the DUNE ND, which use sensors on integrated circuit boards, and a network-on-chip for collider pixel detectors, where individual pixels serve as network elements. They will also use theoretical analysis and simulation to investigate ad hoc wireless networks in three-dimensional space, a topic relevant to a proposed smart dust sensor array.

By integrating AI directly into sensor array hardware, the project aims to develop self-adapting, fault-tolerant systems capable of withstanding extreme environments, improving data flow, and boosting the effectiveness of future HEP experiments. The team also aims to extend the approach to next-generation sensor technologies, addressing the challenges of ultra-low-mass tracking detectors for future colliders, such as the proposed Future Circular Collider Higgs Factory.

“I’m thrilled to see the successful Hydra concept advancing with machine learning capabilities that will unlock even greater power,” says Physics Division Director Nathalie Palanque-Delabrouille. “This evolution will open up exciting new application areas.”

For more information on ATAP News articles, contact caw@lbl.gov.